H100, Quantum-2 and the library updates are all part of NVIDIA’s HPC platform. This full technology stack, spanning CPUs, GPUs, DPUs, systems, networking and a broad range of AI and HPC software, lets researchers efficiently accelerate their work on powerful systems, on premises or in the cloud.

“AI is reinventing the scientific method. Learning from data, AI can predict impossibly complex workings of nature, from the behavior of plasma particles in a nuclear fusion reactor to human impact on regional climate decades in the future,” said Jensen Huang, founder and CEO of NVIDIA. “By providing a universal scientific computing platform that accelerates both principled numerical and AI methods, we’re giving scientists an instrument to make discoveries that will benefit humankind.”

Azure First to Offer NVIDIA Quantum-2 for HPC Workloads

Microsoft Azure’s adoption of the NVIDIA Quantum-2 InfiniBand networking platform follows the general availability of NVIDIA Quantum-2, announced at GTC in March.

“The future of transformative enterprise technologies such as AI and HPC is in next-generation cloud platforms like Microsoft Azure, where innovators have the opportunity to deliver a new era of technological breakthroughs,” said Nidhi Chappell, general manager of Azure AI Infrastructure at Microsoft. “The NVIDIA Quantum-2 InfiniBand networking platform equips Azure with the throughput capabilities of a world-class supercomputing center, available at cloud scale and on demand, and allows researchers and scientists using Azure to achieve their life’s work.”

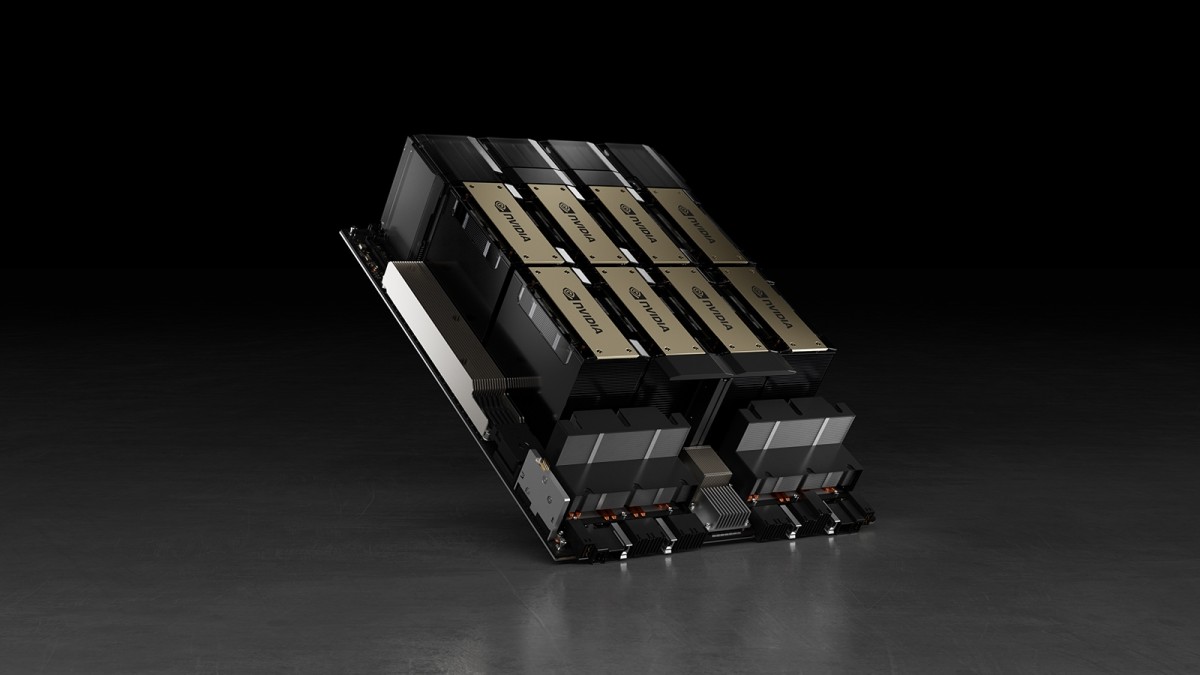

Dozens of New Servers Turbocharged With H100, NVIDIA AI

ASUS, Atos, Dell Technologies, INGRASYS, GIGABYTE, Hewlett Packard Enterprise, Lenovo, Penguin Solutions, QCT and Supermicro are among NVIDIA’s many partners that are announcing H100-powered servers in a wide variety of configurations.

A five-year license for NVIDIA AI Enterprise, a cloud-native software suite that streamlines the development and deployment of AI, is included with every H100 PCIe GPU. This ensures organizations have access to the AI frameworks and tools they need to build H100-accelerated AI solutions, from medical imaging to weather models to safety alert systems and more.

Among the wave of new systems is the Dell PowerEdge XE9680, which tackles the most demanding AI and high-performance computing workloads. It is Dell’s first eight-way system based on the NVIDIA HGX™ platform, which is purpose-built for the convergence of simulation, data analytics and AI.

“AI is propelling innovation unlike any technology before it,” said Rajesh Pohani, vice president of portfolio and product management for PowerEdge, HPC and Core Compute at Dell Technologies. “Dell PowerEdge servers with NVIDIA Hopper GPUs support customers to push the boundaries and make possible new discoveries across industries and institutions.”

Major Updates to Acceleration Libraries

To help boost scientific discovery, NVIDIA has released major updates to its CUDA, cuQuantum and DOCA acceleration libraries:

1. NVIDIA CUDA libraries now include a multi-node, multi-GPU Eigensolver enabling unprecedented scale and performance for leading HPC applications like VASP, a package for first-principles quantum mechanical calculations.

2. The NVIDIA cuQuantum software development kit for accelerating quantum computing workflows now supports approximate tensor network methods, which allow researchers to simulate tens of thousands of qubits. The update also automatically enables multi-node, multi-GPU support for quantum simulation with unparalleled performance using the cuQuantum Appliance.

3. NVIDIA DOCA, the open cloud SDK and acceleration framework for NVIDIA BlueField DPUs, includes advanced programmability, security and functionality to support new storage use cases.

These libraries let researchers scale their work across multiple servers and deliver major performance gains to drive scientific discovery. The NVIDIA HPC acceleration libraries are available on the leading cloud platforms: AWS, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure.