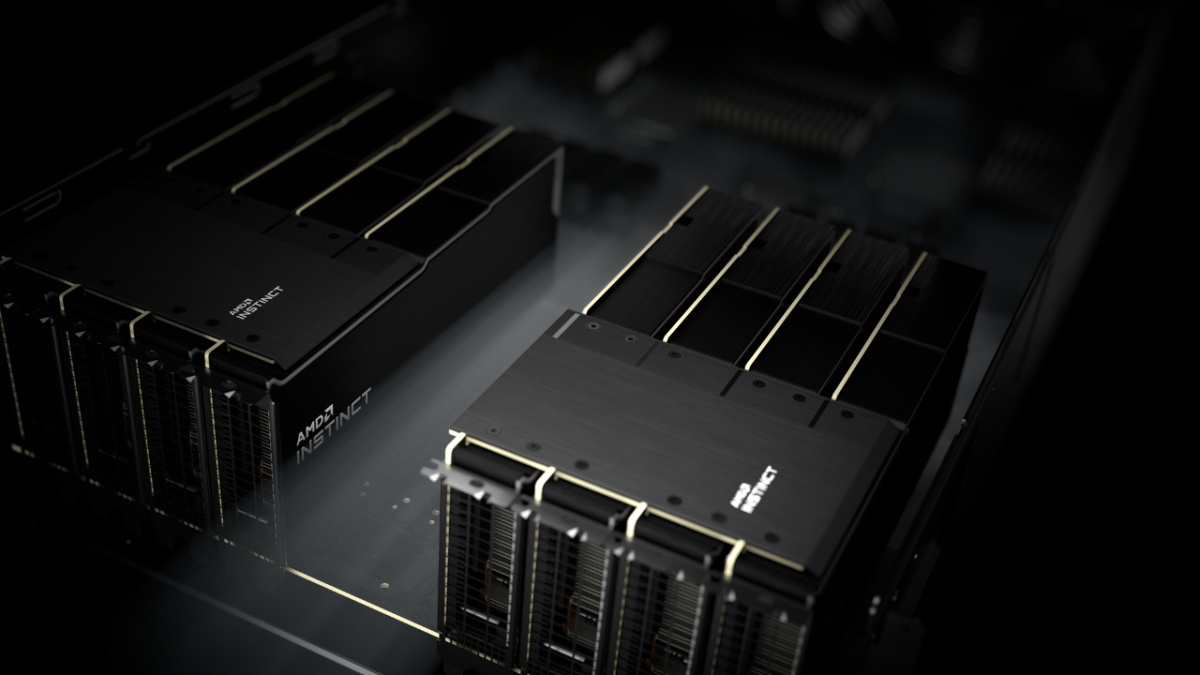

AMD and Microsoft have continued their collaboration in the cloud: Microsoft announced that it is using AMD Instinct™ MI200 accelerators to power large-scale AI training workloads. Microsoft also announced that it is working closely with the PyTorch Core team and the AMD data center software team on two goals. The first is to optimize performance and the developer experience for customers running PyTorch on Microsoft Azure. The second is to ensure that developers’ PyTorch projects take advantage of the performance and features of AMD Instinct accelerators.

“AMD Instinct MI200 accelerators provide customers with cutting-edge AI and HPC performance and will power many of the world’s fastest supercomputer systems that push the boundaries of science,” said Brad McCredie, corporate vice president, Data Center and Accelerated Processing, AMD. “Our work with Microsoft to enable large-scale AI training and inference in the cloud not only highlights the expansive technology collaboration between the two companies, but the performance capabilities of AMD Instinct MI200 accelerators and how they will ultimately help customers advance the growing demands for AI workloads.”

“We’re proud to build upon our long-term commitment to innovation with AMD and make Azure the first public cloud to deploy clusters of the AMD Instinct MI200 accelerator for large scale AI training,” said Eric Boyd, corporate vice president, Azure AI, Microsoft. “We have started testing the MI200 with our own AI workloads and are seeing great performance, and we look forward to continuing our collaboration with AMD to bring customers more performance, choice and flexibility for their AI needs.”

The AMD Instinct MI200 accelerators join other AMD products used in Microsoft Azure. These include the newly announced Azure HBv3 virtual machines, which use 3rd Gen AMD EPYC™ processors with AMD 3D V-Cache™ Technology, and numerous other instances for confidential computing, general-purpose workloads, memory-bound workloads, visual workloads and more.